AI in the Cradle: How Algorithms Offer a Hands-Off Approach to Newborn Health Monitoring

The old adage “they grow up so fast” is never truer than during a child’s first year of life. Babies roughly triple their weight by their first birthday, a rate unmatched for the rest of their lives. From motor control to pattern recognition to visual development, the infant stage is teeming with neurological and physiological changes. It’s also extremely difficult to study.

Whether in a hospital or home setting, newborn care is, quite understandably, wrapped in privacy and security, which makes gathering data difficult. To study, say, motor function, researchers have to rely on volunteers to supply data about infant poses, postures, or actions, the kind of information needed to gauge developmental progress. Not only does this arrangement mean that signs of developmental problems can go unnoticed, it also means studies of infant populations tend to lack sufficient data.

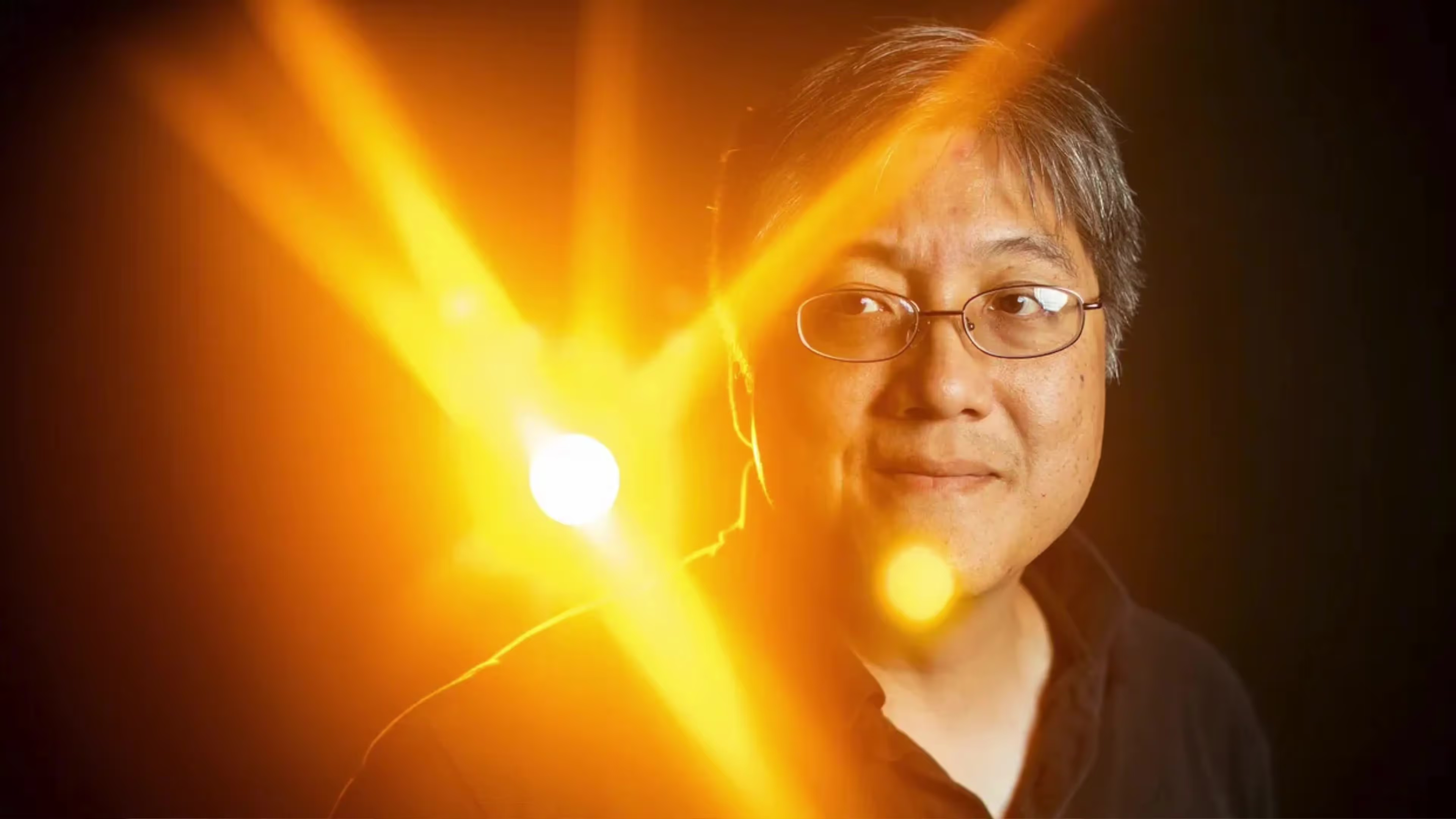

But what if there were a way to collect some of that data without intruding upon or interfering with newborn care? What if the early signs of a neurodevelopmental disorder could be detected, tracked, and observed by medical professionals in the infant’s home? For Sarah Ostadabbas, a core faculty member at the Institute for Experiential AI, that kind of intervention is only possible with AI.

The Hands-Off Approach

Sarah works at the intersection of computer vision, machine learning, and pattern recognition. Much of her career has been dedicated to developing vision-based AI algorithms that enable long-term monitoring of human and animal behaviors.

“My infant study is centered on the question of,” she says, “is it possible to provide a series of AI-driven tools that are accessible and allow for monitoring, tracking, and quantification of a specific developmental condition from everyday videos?”

Judging by some of her funding sources, the answer is a resounding yes. Last year, she received a CAREER award from the National Science Foundation (NSF) to investigate the use of computer vision and machine learning in identifying early signs of autism in infants. Other supporters of her computer vision work include Amazon, Oracle, NVIDIA, Verizon, Biogen, and the U.S. Department of Defense. And just last month, one of her papers was accepted at MICCAI ’23, the International Conference on Medical Image Computing and Computer Assisted Intervention.

Key to her research’s appeal is her hands-off approach. Collecting data through consumer devices like phones and baby monitors leaves families undisturbed while also allowing her team to cast a wider net when it comes to collecting population data.

“The whole idea is to keep the baby where they are,” says Sarah, who is also an associate professor in the department of electrical and computer engineering at Northeastern University (NU) and director of the Augmented Cognition Laboratory. “Whether they are at home or in the NICU, the system can be brought to them and be installed as easily as setting up a webcam.”

The idea is to take video captured from baby monitors, nanny cams, webcams, or phones, feed it through a series of computer vision algorithms to detect certain patterns about infant mobility or behavior, and then inform pediatricians, clinicians, parents, or other decision-makers where necessary.

“I don't want to claim any health-related decision making here,” she adds. “Our algorithms just extract patterns from long videos for quantifying and scoring an infant's motor quality, and then the pediatrician can look at the results and say, ‘OK, this is alarming’ or ‘this is normal.’”
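To make the idea of "quantifying" movement from video concrete, here is a minimal sketch in Python. It is not the lab's method. It assumes a pose estimator has already produced a list of (x, y) body keypoints for every video frame, and it computes one crude measure, the average keypoint speed. The function name `mean_keypoint_speed` and the input layout are hypothetical, chosen only for illustration.

```python
import math


def mean_keypoint_speed(frames, fps):
    """Average keypoint speed in pixels per second.

    `frames` is a list of frames; each frame is a list of (x, y) keypoints,
    assumed to come from some pose estimator (hypothetical input format).
    `fps` is the video frame rate.
    """
    total_distance = 0.0
    steps = 0
    # Compare each frame with the next one, keypoint by keypoint.
    for prev, curr in zip(frames, frames[1:]):
        for (x0, y0), (x1, y1) in zip(prev, curr):
            total_distance += math.hypot(x1 - x0, y1 - y0)
            steps += 1
    if steps == 0:
        return 0.0
    # Mean displacement per frame, scaled to displacement per second.
    return (total_distance / steps) * fps
```

A number like this is only a summary of motion. Deciding whether it is alarming or normal stays with the pediatrician.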

Partnering up for Infant Care

In addition to motor function, Sarah is involved in a collaborative project to monitor a specific infant behavior known as non-nutritive sucking (NNS). An important indicator of nervous system development, NNS is defined as any kind of sucking action a baby performs when a finger, pacifier, or other object is placed in their mouth; said another way, it’s sucking without any nutrient delivery.

NNS data, such as frequency, amplitude, and number of cycles, provide physicians with important clues about feeding readiness, especially in preterm infants, as healthy NNS has been shown to reduce lengths of stay for infants born prematurely. The problem is that the prevailing method for measuring NNS is highly subjective, amounting to a finger-in-the-mouth assessment. Sensor-based alternatives aren’t much better, relying on pressure transducers in the pacifier that can alter the baby’s natural sucking patterns.
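As an illustration of what frequency, amplitude, and number of cycles mean in practice, the Python sketch below summarizes a one-dimensional sucking signal. It assumes such a signal has already been obtained, whether from a pacifier sensor or from video, and it uses a simple local-maximum rule to find sucks. The function name `nns_summary`, the `min_height` threshold, and the peak rule are assumptions made for this example, not the researchers' algorithm.

```python
import math


def nns_summary(signal, fps, min_height=0.5):
    """Summarize a 1-D sucking signal sampled at `fps` samples per second.

    A sample counts as a suck peak when it is higher than the sample before
    it, at least as high as the sample after it, and at least `min_height`
    (an illustrative threshold, not a clinical value).
    """
    peaks = [
        i
        for i in range(1, len(signal) - 1)
        if signal[i] > signal[i - 1]
        and signal[i] >= signal[i + 1]
        and signal[i] >= min_height
    ]
    cycles = len(peaks)
    # Mean peak height stands in for amplitude.
    mean_amplitude = sum(signal[i] for i in peaks) / cycles if cycles else 0.0
    if cycles >= 2:
        # Sucks per second, measured between the first and last peak.
        duration_s = (peaks[-1] - peaks[0]) / fps
        frequency_hz = (cycles - 1) / duration_s
    else:
        frequency_hz = 0.0
    return {
        "cycles": cycles,
        "frequency_hz": frequency_hz,
        "mean_amplitude": mean_amplitude,
    }


# Synthetic example: three seconds of a 2 Hz sine wave sampled at 30 fps.
demo_signal = [math.sin(2 * math.pi * 2 * t / 30) for t in range(90)]
demo_summary = nns_summary(demo_signal, fps=30)
```

Real recordings are noisy, so a practical tool would need smoothing and a more careful definition of a suck cycle than this sketch provides.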

To find a solution, Sarah teamed up with Emily Zimmerman, an associate professor and department chair in the Bouvé College of Health Sciences at Northeastern. Together, they are building a contactless NNS measurement tool that makes use of recorded video to analyze facial muscle signals and model sucking behavior. The work draws on a sensorized pacifier that Emily developed, but the aim is to make the whole system fully contactless.

“Emily and I came together to see if we can make a scalable solution that is unobtrusive and can be used everywhere,” Sarah says. “And what is better than a vision-based system that doesn’t touch anything? You just record a two-minute video of the infant with their own pacifier and then the system spits out the quality of the sucking.”

The Future of Infant Care

Sarah and Emily Zimmerman, who is also a faculty member at the Institute, have been publishing work in this area for the last few years. Their recent MICCAI paper, which describes NNS detection and segmentation research, marks their acceptance into one of the most prestigious conferences covering the overlap between AI and health.

The two are also hard at work getting a company off the ground to scale the technology as a marketable medical device. The spin-off startup is called NeuroSense Diagnostics and has already received several seed funding awards.

Uniting Sarah’s many ongoing projects is a desire to innovate in spaces that are sparse on data. She calls them “small data domains,” and they’re of critical importance not just in the fields of AI and data science, but in healthcare and medicine as well. The sensitive care environment of a newborn child is a perfect example, with AI presenting an incredible opportunity to improve and broaden pediatric care everywhere.
