Expert Insights on Responsible AI Solutions for Healthcare: Best Practices for Implementation

Overview

Responsible AI is essential as healthcare organizations adopt AI tools. Ethical frameworks that prioritize safety, transparency, and accountability can meaningfully improve patient outcomes. Best practices such as establishing governance frameworks, conducting regular audits, and engaging stakeholders foster trust and help mitigate bias in AI applications. These measures make healthcare delivery more effective and build a foundation for responsible innovation. Healthcare administrators should actively adopt these strategies, given their potential impact on patient care.

Introduction

In the rapidly evolving landscape of healthcare, the integration of Artificial Intelligence (AI) presents a double-edged sword. While AI holds the promise of revolutionizing patient care, it also raises critical ethical concerns that cannot be overlooked. Responsible AI in healthcare is not merely a technological advancement; it is an ethical imperative that demands a framework grounded in safety, transparency, and accountability.

As healthcare providers and organizations strive to harness the power of AI, they must navigate a complex web of challenges, from addressing biases to ensuring compliance with evolving regulations. This article delves into the principles of Responsible AI, best practices for implementation, and the pivotal role of education and training in fostering a culture of accountability. By prioritizing ethical considerations, the healthcare sector can pave the way for a future where AI enhances, rather than compromises, patient care.

1. Understanding Responsible AI in Healthcare

Responsible AI in medicine is an ethical framework that guides the development and deployment of AI technologies, emphasizing safety, transparency, and accountability. This framework is essential for fostering trust between patients and their providers, which in turn supports better patient outcomes. Key principles of Responsible AI include fairness, explainability, and alignment with human values, so that technology enhances rather than undermines patient care.

In 2025, experts continue to stress the need to build ethical considerations into AI systems. Many medical professionals recognize the need to address racial and ethnic bias in AI applications, and public perception of the problem varies sharply by group: recent statistics show that 64% of Black adults and 42% of Hispanic adults see racial bias as a pressing issue in medicine, compared with only 27% of White adults. This disparity highlights the need for AI tools used in healthcare to undergo rigorous bias testing, since undetected bias could lead to unequal treatment outcomes. Healthcare administrators and practitioners must prioritize these ethical frameworks as they integrate AI solutions into their operations.

By leveraging AI advances such as those demonstrated in our case study with Santovia, which used large language models (LLMs) and Retrieval Augmented Generation (RAG), healthcare organizations can transform access to personalized health information and enhance diagnostics and treatment. This work aligns with the overarching goal of improving care and safety, and it illustrates how responsible, human-centric AI can transform patient engagement. Organizations that adopt Responsible AI practices are likely to see a positive impact on care outcomes, because these frameworks foster a more equitable and effective care environment.

Examples of ethical AI frameworks in medical settings include initiatives that promote transparency in AI decision-making and guidelines that establish accountability for AI applications. These frameworks guide the responsible use of AI and also serve as a foundation for building trust with patients and other stakeholders. As the medical landscape evolves, a sustained commitment to responsible AI will be vital for navigating the complexities of implementation and ensuring that technology serves patients' best interests.

Learn more about how we assisted Santovia in our case study.

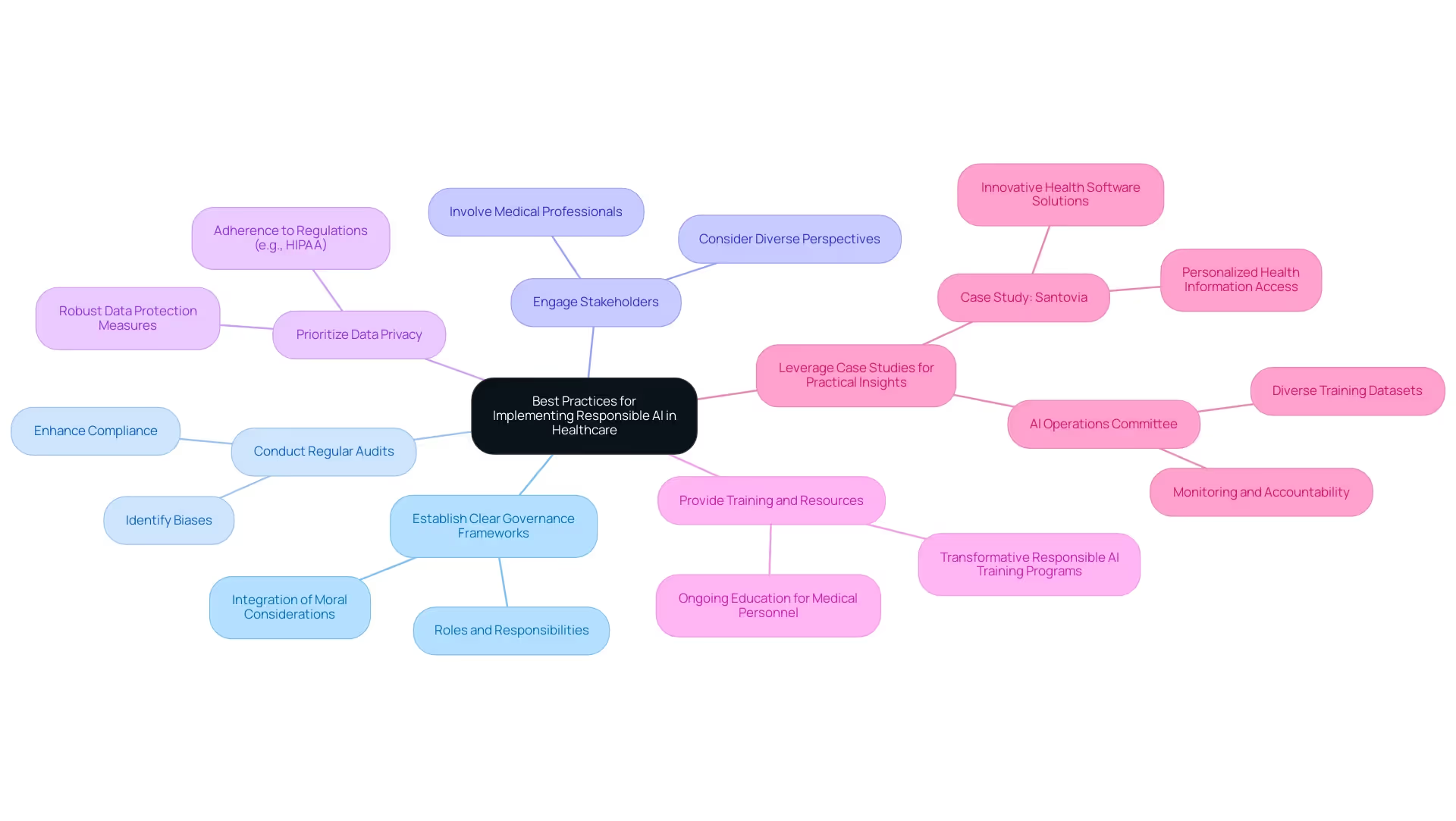

2. Best Practices for Implementing Responsible AI

Implementing Responsible AI in healthcare requires a structured, strategic approach built on several best practices that prioritize ethical standards and patient safety.

- Establish Clear Governance Frameworks: Organizations must develop comprehensive governance structures that define roles and responsibilities for AI oversight. This clarity fosters accountability and ensures that ethical considerations are integrated into every stage of AI deployment.

- Conduct Regular Audits: Routine audits of AI systems are essential for identifying biases and ensuring adherence to ethical standards. These audits not only enhance compliance but also build trust among stakeholders, reinforcing the integrity of AI applications in healthcare.

- Engage Stakeholders: Actively involving medical professionals, patients, and ethicists in the AI development process is crucial. This engagement helps ensure that diverse perspectives are considered, making AI systems more relevant and effective in addressing real-world healthcare challenges.

- Prioritize Data Privacy: Robust data protection measures must be implemented to safeguard patient information. Adherence to regulations such as HIPAA is essential, as it protects patient privacy and promotes trust in AI technologies.

- Provide Training and Resources: Ongoing education for medical personnel on AI tools and ethical considerations is essential for successful implementation. The Institute for Experiential AI offers responsible AI training programs for leaders that equip professionals with the knowledge and skills to use AI technologies responsibly and effectively. Learn more about our Responsible AI for Leaders: Executive Education course.

- Leverage Case Studies for Practical Insights: The recent Santovia case study illustrates how innovative health software can transform access to personalized health information through responsible AI. By analyzing such cases, healthcare organizations can learn which methods work best and how integrating responsible AI affects care.
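As a concrete illustration of the "Conduct Regular Audits" practice, the Python sketch below shows one simple bias check an audit might include: computing a model's true positive rate (sensitivity) for each patient subgroup and flagging the audit when the gap between the best- and worst-served groups exceeds a threshold. This is a minimal sketch under stated assumptions, not a complete audit method: the function names, the example records, the group labels "A" and "B", and the 0.1 threshold are all hypothetical, and a real audit would rely on validated data, several fairness metrics, and clinically justified thresholds.

```python
from collections import defaultdict


def subgroup_tpr(records):
    """Return the true positive rate (sensitivity) for each subgroup.

    Each record is a (group, y_true, y_pred) tuple with binary 0/1 labels.
    Groups with no actual positive cases are omitted, since TPR is undefined.
    """
    positives = defaultdict(int)       # actual positive cases per group
    true_positives = defaultdict(int)  # positives the model correctly flagged
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}


def audit_tpr_gap(records, max_gap=0.1):
    """Flag the audit when the subgroup TPR spread exceeds max_gap (hypothetical threshold)."""
    rates = subgroup_tpr(records)
    if not rates:
        raise ValueError("no positive cases to audit")
    gap = max(rates.values()) - min(rates.values())
    return {"rates": rates, "gap": gap, "flagged": gap > max_gap}


# Hypothetical audit sample: (patient subgroup, actual outcome, model prediction)
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 1),
]
result = audit_tpr_gap(records)
print(result["flagged"])  # True: TPR is about 0.67 for A vs 0.33 for B
```

Sensitivity is only one lens; depending on the clinical use case, an audit might also compare false positive rates or calibration across groups.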

The Responsible and Ethical AI for Medical Practice Lab offers insights into applying WHO recommendations in clinical settings, further underscoring the importance of these best practices. By following these guidelines, medical organizations can integrate AI technologies while maintaining a strong commitment to ethical standards and quality care. Emerging federated, agile data management approaches also support real-time decision-making and innovation, in line with the evolving demands of the medical ecosystem in 2025.

One notable case study describes the creation of an AI operations committee that oversees the monitoring and accountability of AI systems. It emphasizes diverse training datasets to mitigate bias and stringent human oversight to maintain patient safety. This proactive approach exemplifies the kind of commitment responsible AI requires when navigating the complexities of medical technology. As A.S. pointed out during their consultancy for Curai Health, integrating these practices is crucial for fostering a responsible AI landscape in the medical field.

To help your organization overcome challenges during AI implementation, we encourage healthcare administrators to schedule a meeting with us to explore how we can support your AI and data journey.

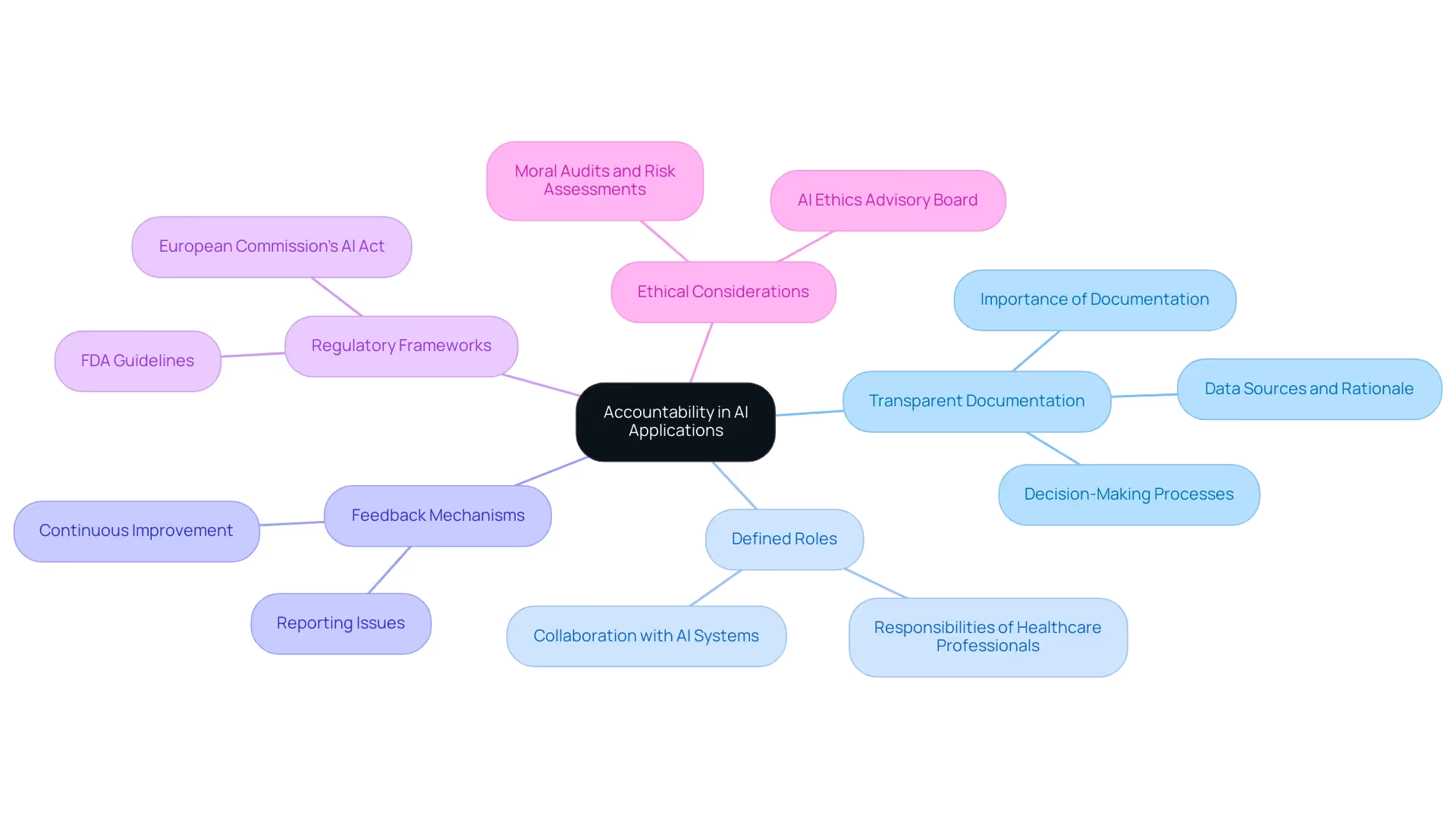

3. The Role of Accountability in AI Applications

Accountability is paramount in healthcare AI, where AI decisions can profoundly influence patient outcomes. Responsible AI requires clear lines of responsibility for AI outcomes, so that providers, developers, and organizations are held accountable for the decisions made by AI systems. This can be achieved through several key practices:

- Transparent Documentation: Maintaining comprehensive records of AI system development is vital. This includes detailed documentation of decision-making processes, data sources, and the rationale behind algorithmic choices. Such transparency not only facilitates accountability but also builds trust among stakeholders.

- Defined Roles: It is imperative to clearly outline the roles of healthcare professionals with respect to AI systems. When clinicians understand their responsibilities for monitoring and interpreting AI outputs, organizations can foster a collaborative environment in which human oversight complements AI capabilities.

- Feedback Mechanisms: Implementing robust systems for reporting and addressing issues that arise from AI applications is essential. These mechanisms allow for continuous improvement and accountability, enabling organizations to learn from AI performance and make necessary adjustments.
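The three accountability practices above can be supported by keeping one shared record per AI system. The Python sketch below is a hypothetical structure, loosely in the spirit of model-card-style documentation rather than any standard schema, that keeps documentation (data sources, design rationale), a named accountable owner (defined roles), and an issue log (feedback mechanism) together. All class names, field names, and example values are illustrative assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date


@dataclass
class IssueReport:
    """One feedback entry about an AI system's behavior (hypothetical schema)."""
    reported_on: date
    reporter_role: str   # e.g. "clinician" or "data scientist"
    description: str
    resolved: bool = False


@dataclass
class ModelRecord:
    """Documentation, ownership, and feedback for one AI system (hypothetical schema)."""
    name: str
    version: str
    accountable_owner: str   # defined role: who answers for this system's outputs
    data_sources: list[str]  # transparent documentation of training data
    design_rationale: str    # why key algorithmic choices were made
    issues: list[IssueReport] = field(default_factory=list)

    def report_issue(self, reporter_role: str, description: str, when: date) -> IssueReport:
        """Log a new issue so it can be tracked until resolved."""
        report = IssueReport(when, reporter_role, description)
        self.issues.append(report)
        return report

    def open_issues(self) -> list[IssueReport]:
        """Return issues that still need follow-up."""
        return [issue for issue in self.issues if not issue.resolved]


record = ModelRecord(
    name="example-triage-model",  # hypothetical system
    version="1.0",
    accountable_owner="AI Oversight Committee",
    data_sources=["de-identified EHR extract (hypothetical)"],
    design_rationale="Interpretable model chosen so clinicians can review each prediction.",
)
report = record.report_issue("clinician", "Alert triggered for a low-risk patient.", date(2025, 1, 15))
print(len(record.open_issues()))  # 1
report.resolved = True
print(len(record.open_issues()))  # 0
```

Attaching issue reports to the same record as the documentation makes it easier for reviewers to see who is accountable for a system and which problems remain open.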

The importance of accountability is underscored by evolving regulatory frameworks, such as those from the FDA and the EU AI Act, which emphasize transparency and accountability for high-risk applications. These frameworks shape the practices outlined above and help ensure that AI solutions for healthcare are developed and deployed responsibly.

As we look toward 2025, the integration of AI in healthcare must be paired with robust ethical frameworks to prevent harm and promote equity. The AI Ethics Advisory Board, made up of more than 40 multidisciplinary specialists from academia, industry, and government, offers on-demand, conflict-free guidance tailored to organizational needs. Its services include ethics audits, risk assessments, and the development of responsible AI frameworks.

This expert-led approach is crucial for managing the intricacies of AI deployment in medical services, and it strengthens accountability by ensuring that ethical considerations are built into AI systems from the beginning.

Moreover, as Jeremy Kahn, AI editor at Fortune, notes, "We can kind of rely on some of these professional standard-setting bodies to potentially do some of this work and set standards for, well, what do we want out of these copilot systems?" This underscores the role of established standards in enhancing accountability in AI applications.

By nurturing a culture of accountability and drawing on the expertise of the AI Ethics Advisory Board, medical institutions can strengthen trust in AI technologies and implement responsible AI solutions that improve patient care.

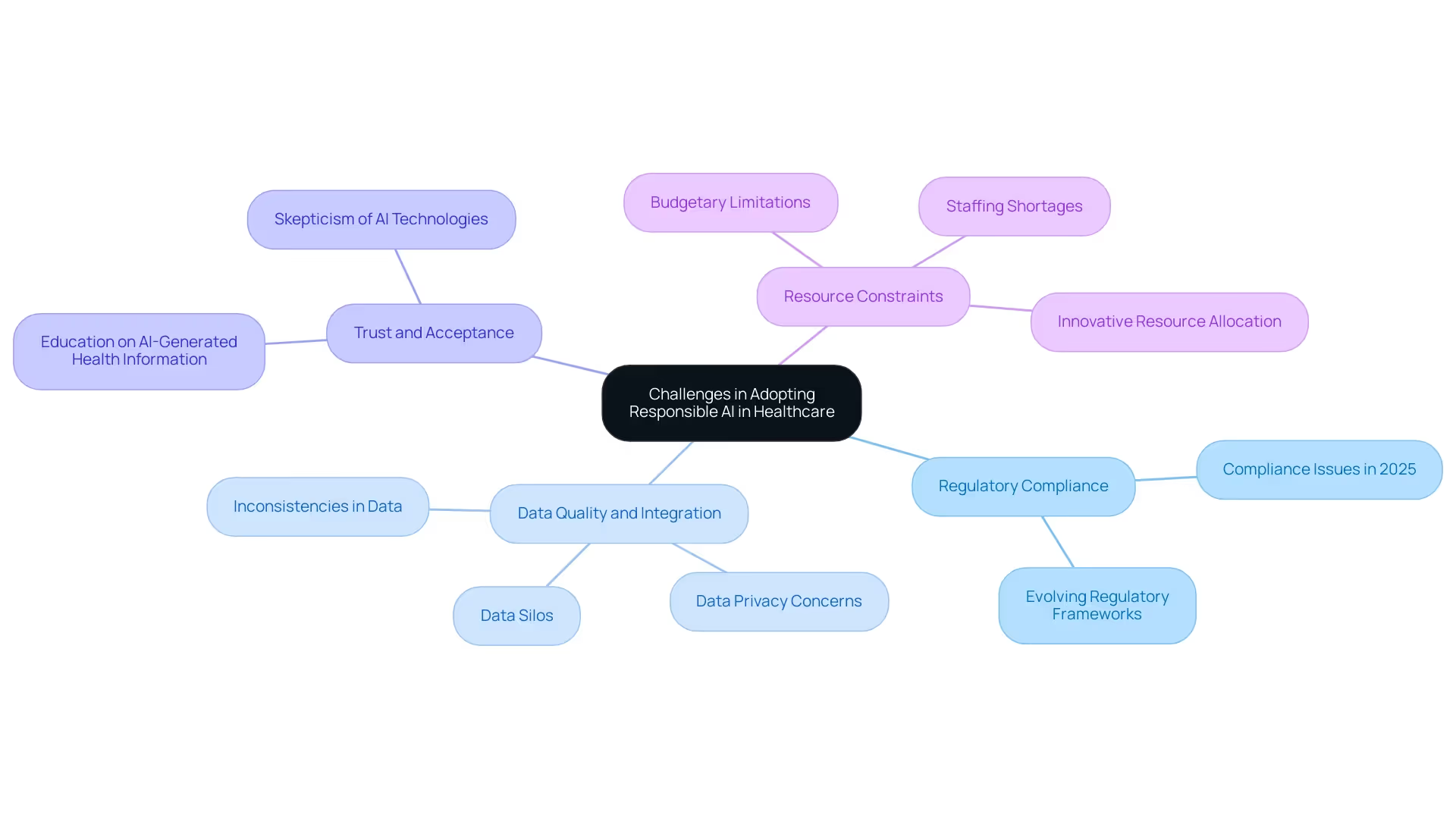

4. Challenges in Adopting Responsible AI in Healthcare

Implementing Responsible AI in medical settings presents numerous challenges that organizations must navigate to realize the full potential of these technologies. Key obstacles include:

- Regulatory Compliance: The intricate web of medical regulations poses significant hurdles. Organizations must ensure that their AI systems meet current legal standards and adapt to evolving regulatory frameworks. In 2025, compliance remains a top concern, and many organizations struggle to keep pace with new guidelines.

- Data Quality and Integration: The effectiveness of AI solutions hinges on the quality of the data used for training. However, medical organizations often grapple with data silos and inconsistencies, compromising the accuracy and reliability of AI outputs. Ensuring that data is both representative and high-quality is critical for successful AI implementation. A case study on the challenges of GenAI adoption highlights that addressing data privacy and regulatory compliance is crucial for unlocking the potential benefits of Generative AI in medical operations.

- Trust and Acceptance: Earning the confidence of medical professionals and patients is paramount. Skepticism surrounding AI technologies can impede their adoption, making it essential for entities to foster transparency and educate stakeholders on interpreting AI-generated health information. This approach not only builds confidence but also enhances the overall medical experience.

- Resource Constraints: Budget limitations and staffing shortages are common in many healthcare settings and can hinder the development and deployment of AI solutions. Organizations must find innovative ways to allocate resources effectively to overcome these barriers. As Cem Dilmegani's success with Hypatos demonstrates, organizations that prioritize foundational improvements and adopt flexible operating models are more likely to emerge as leaders in this dynamic landscape. Ryan Sousa emphasizes that using analytics and AI to balance efficiency with growth will be key for organizations navigating these challenges.

The AI Solutions Hub at the Institute for Experiential AI empowers organizations with responsible AI solutions, upskilling, and expert guidance to ensure sustainable growth and responsible implementation. Addressing these challenges necessitates a collaborative effort among all stakeholders, including policymakers, service providers, and technology developers. By collaborating, they can establish an environment that facilitates the responsible adoption of AI, ultimately resulting in enhanced outcomes for individuals and operational efficiencies. Furthermore, GenAI proof-of-concepts are expected to demonstrate value in the medical field by optimizing diagnostics, patient flow, and administrative tasks, reinforcing the importance of overcoming the outlined challenges.

Through executive education courses on Responsible AI, leaders can acquire strategies, tools, and networking opportunities necessary for implementing responsible AI solutions for healthcare.

Call to Action: To learn more about how the AI Solutions Hub can assist your organization with tailored support, including AI upskilling and responsible AI guidance, please reach out today. Explore our experiential education courses designed to equip leaders in the field with the knowledge and skills needed for successful AI implementation.

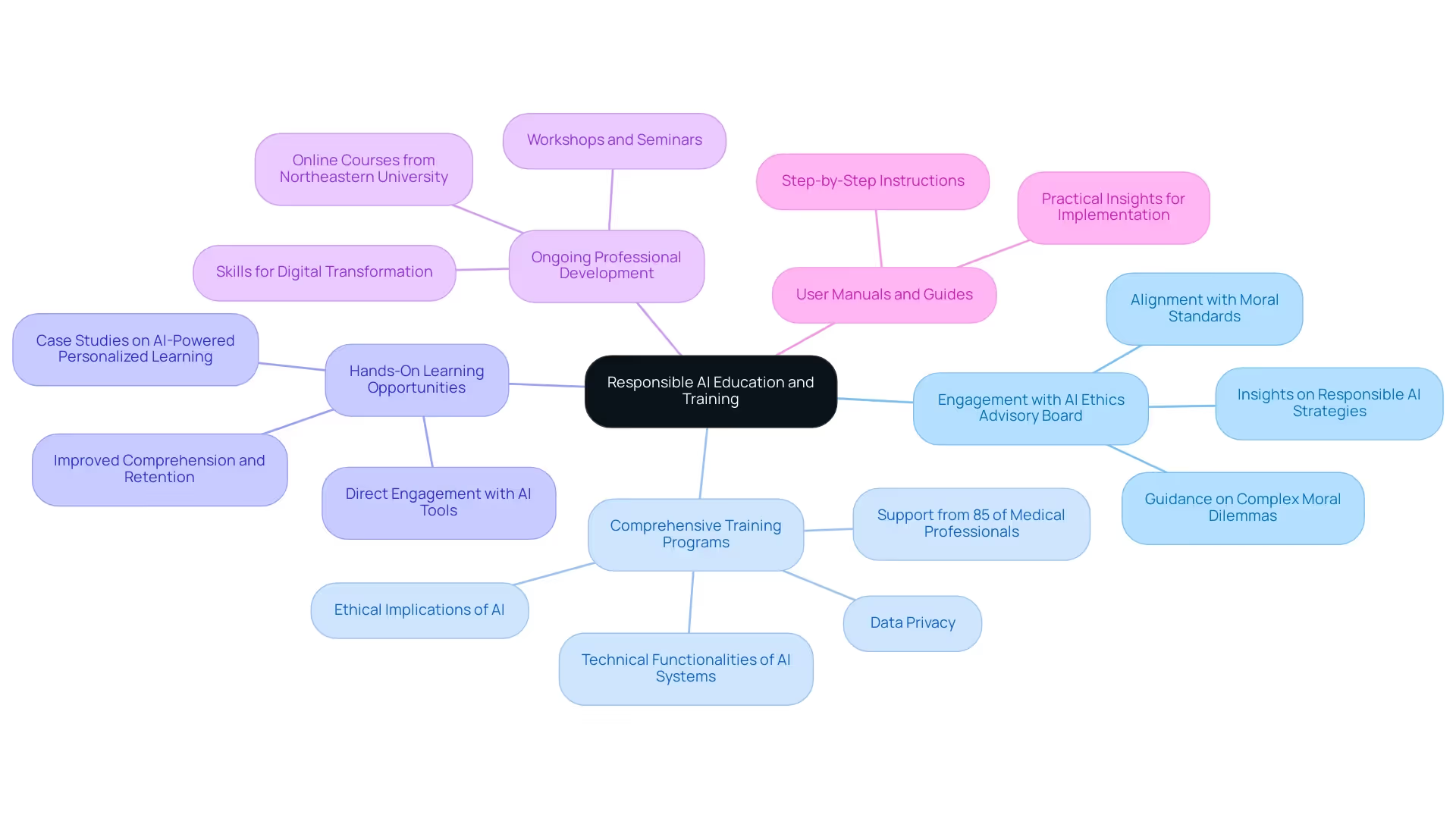

5. The Importance of Education and Training in Responsible AI

Education and training are essential for the successful implementation of responsible AI solutions in healthcare. Organizations must prioritize the following strategies:

- Engagement with the AI Ethics Advisory Board: Collaborating with the AI Ethics Advisory Board offers valuable insights into responsible AI strategies tailored to the healthcare sector. This engagement helps organizations align their AI implementations with ethical standards and best practices, reinforcing their commitment to transparency and safety. The board provides customized strategies, resources, and guidance on responsible AI practices, helping organizations navigate complex ethical dilemmas.

- Comprehensive Training Programs: Establish training initiatives that cover the ethical implications of AI, data privacy, and the technical functionality of AI systems. This foundational knowledge is crucial for medical professionals to navigate the complexities of AI technologies. Notably, 85% of medical professionals support national efforts to ensure AI safety and transparency, a level of support that robust training programs can build on.

- Hands-On Learning Opportunities: Facilitate practical experiences for healthcare professionals to engage directly with AI tools. Such opportunities enable them to grasp the applications and limitations of these technologies, fostering a deeper understanding of how AI can enhance patient care. For instance, case studies on AI-powered personalized learning demonstrate that tailored educational approaches can lead to improved comprehension and retention, reinforcing the value of hands-on experiences in AI education.

- Ongoing Professional Development: Promote continuous learning through workshops, seminars, and online courses, particularly those offered by the Institute for Experiential AI at Northeastern University. These programs focus on responsible AI education, equipping leaders with the necessary skills to navigate digital transformation and generative AI integration. This commitment to professional growth ensures that staff remain informed about the latest advancements in AI and the ethical considerations that accompany their use.

- User Manuals and Guides: Provide access to user manuals and guides that support organizations in their data-driven transformations. These resources offer practical insights and step-by-step instructions for implementing responsible AI practices, ensuring that medical professionals are well equipped to make informed decisions.

Investing in education and training not only empowers healthcare organizations to use AI responsibly but can also significantly improve patient care outcomes. By prioritizing these educational strategies, organizations can cultivate a workforce adept at using AI technologies ethically and effectively. As Carlos Luis Sanchez-Bocanegra, PhD, emphasizes, integrating ethical training into AI education is vital for fostering a responsible approach to technology in the medical field.

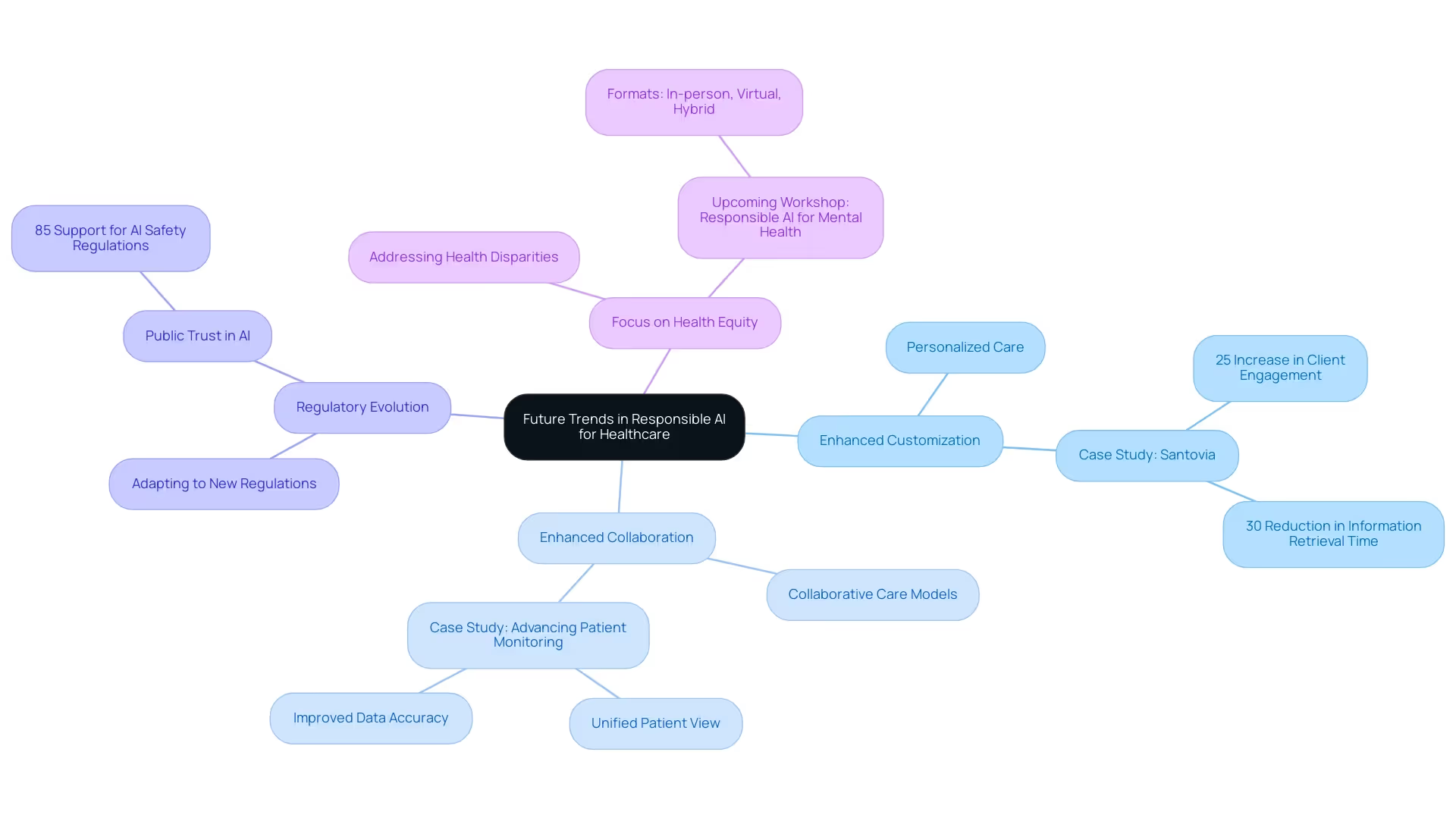

6. Future Trends in Responsible AI for Healthcare

The future of Responsible AI in healthcare is poised for transformative advancements, highlighted by several key trends:

- Enhanced Customization: AI technologies are paving the way for highly personalized care, enabling providers to tailor treatments and interventions to individual needs. By drawing on data-driven insights, practitioners can improve outcomes and satisfaction, making healthcare more responsive to each patient's profile. For instance, studies indicate that adult survivors across a wide range of cancers face a 37% higher risk of developing cardiovascular disease, the kind of elevated, patient-specific risk that personalized, AI-supported care could help address. A recent collaboration between Santovia, Northeastern University’s Institute for Experiential AI, and Prima CARE demonstrates how AI-powered solutions can enhance patient engagement and healthcare decision-making. The project uses large language models (LLMs) and Retrieval Augmented Generation (RAG) to improve access to personalized health information and integrates AI-driven insights into clinical workflows. This initiative reflects the growing role of responsible AI in making healthcare more efficient and accessible, particularly in digital pathology and patient engagement.

- Enhanced Collaboration: The integration of AI with complementary technologies, such as telemedicine and wearable devices, is fostering collaborative care models. This synergy not only streamlines communication among medical teams but also empowers individuals to engage actively in their care, ultimately leading to improved health outcomes.

- Regulatory Evolution: As AI technologies continue to advance, regulatory frameworks will also evolve. Healthcare organizations must remain agile, adapting their practices to comply with new regulations that ensure the safety and efficacy of AI applications. Notably, 85% of respondents support national efforts to ensure AI safety and transparency, emphasizing the importance of regulatory evolution and public trust in AI applications. This proactive approach will be crucial in maintaining trust and accountability in responsible AI solutions for healthcare.

- Focus on Health Equity: A significant trend will be the growing emphasis on using AI to address health disparities. By ensuring that all populations benefit from technological advances, providers can work toward a more equitable system in which access to quality care is not determined by socioeconomic status or geographic location. Upcoming events such as the Responsible AI for Mental Health Workshop at the Institute for Experiential AI on April 3, 2025, which will be offered in in-person, virtual, and hybrid formats, play an important role in advancing responsible AI for health and well-being.

Staying informed about these trends is essential for organizations aiming to harness the full potential of Responsible AI. By adapting their strategies proactively, they can improve patient care and contribute to a more ethical and equitable healthcare landscape.

Conclusion

The integration of Responsible AI in healthcare transcends mere technological advancement; it fundamentally aims to enhance patient care through ethical principles. By establishing a robust framework that prioritizes safety, transparency, and accountability, healthcare organizations can foster trust and improve patient outcomes with AI technologies. Emphasizing fairness, explainability, and alignment with human values is crucial, especially as the sector addresses biases and navigates evolving regulations.

Implementing best practices such as clear governance, regular audits, and stakeholder engagement is essential for effectively navigating the complexities of AI adoption. Organizations must prioritize data privacy and invest in comprehensive training programs that empower healthcare professionals to utilize AI responsibly. The significance of accountability cannot be overstated; well-defined roles and transparent documentation are vital to ensuring that AI applications are employed ethically and effectively.

As we look to the future, trends toward increased personalization, enhanced collaboration, and a focus on health equity will shape the landscape of Responsible AI in healthcare. By remaining adaptable to regulatory changes and fostering a culture of continuous learning, healthcare organizations can harness the transformative potential of AI while upholding their ethical obligations. Ultimately, the commitment to Responsible AI will not only enhance patient care but also pave the way for a more equitable healthcare system for all.