Abstract

Information access – the challenge of connecting users to previously stored information that is relevant to their needs – dates back millennia. The technologies have changed – from clay tablets stacked in granaries to books on shelves arranged according to the Dewey Decimal Classification to digital content indexed by web search engines – but the aims have not. Large pretrained neural models such as BERT, GPT-3, and most recently, ChatGPT, represent the latest innovations that can help tackle this challenge.

In this talk, Professor Jimmy Lin offered his perspectives on the future of information access in light of these large pretrained neural models. Undoubtedly, these models have already had significant impact, but in his opinion the recent hype surrounding the latest iterations is overblown. He discussed representation learning and different architectures for retrieval, reranking, and information synthesis. Looking back historically, it becomes clear that the vision of effortlessly connecting users to relevant information has not changed, although the tools at our disposal have changed a great deal, creating both tremendous opportunities and challenges.

Flip through the slides from the presentation:

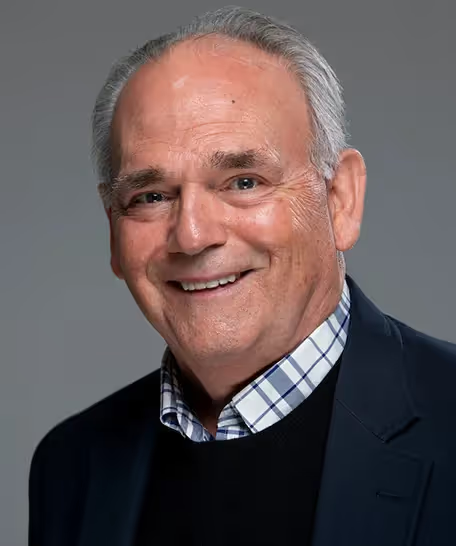

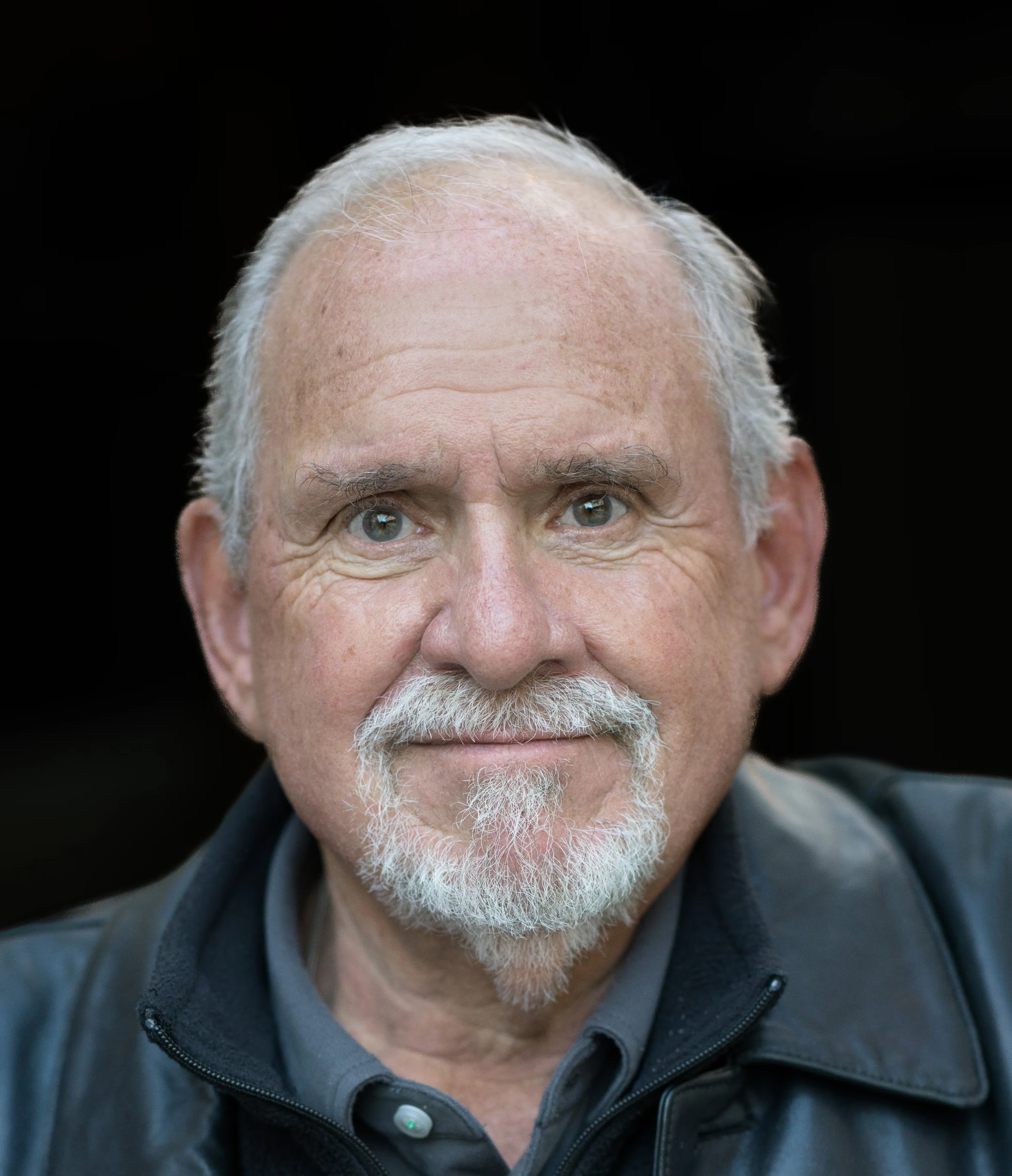

Biography

Professor Jimmy Lin holds the David R. Cheriton Chair in the David R. Cheriton School of Computer Science at the University of Waterloo. Lin received his PhD in Electrical Engineering and Computer Science from the Massachusetts Institute of Technology in 2004. For a quarter of a century, Lin’s research has been driven by the quest to develop methods and build tools that connect users to relevant information. His work mostly lies at the intersection of information retrieval and natural language processing, with a focus on two fundamental challenges: those of understanding and scale.

