Computing Systems in the Foundation Model Era

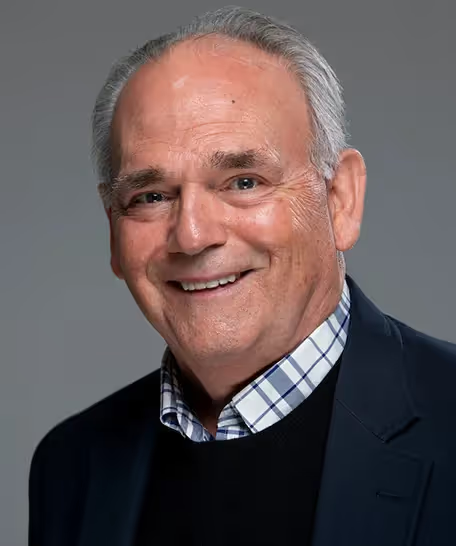

Distinguished Lecturer Seminar with Kunle Olukotun

Abstract

Generative AI applications, with their ability to produce natural language, computer code, and images, are transforming all aspects of society. These applications are powered by huge foundation models such as GPT-4, which have tens of billions of parameters, are trained on trillions of tokens, and have obtained state-of-the-art quality in natural language processing, vision, and speech applications. These models are computationally challenging because they require hundreds of petaFLOPS of computing capacity for training and inference. In this talk, Kunle Olukotun will describe how the evolving characteristics of foundation models will impact the design of the optimized computing systems required for training and serving these models.

Bio

Kunle Olukotun is the Cadence Design Professor of Electrical Engineering and Computer Science at Stanford University. Olukotun is a pioneer in multicore processor design. He founded Afara Websystems to develop high-throughput, low-power multicore processors for server systems. Olukotun co-founded SambaNova Systems, a machine learning and artificial intelligence company, and continues to lead as its Chief Technologist. Olukotun is a member of the National Academy of Engineering, an ACM Fellow, and an IEEE Fellow for contributions to multiprocessor-on-a-chip design and the commercialization of this technology. He received the 2023 ACM-IEEE CS Eckert-Mauchly Award.

Keynote and Industry Speakers

Northeastern University Speakers

Agenda
