Explainable AI in Computer Vision

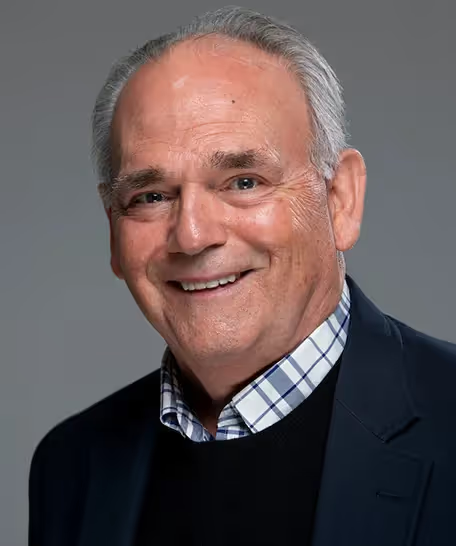

Agata Lapedriza, a principal research scientist at our Institute, presented her Expeditions in Experiential AI Seminar, "Explainable AI in Computer Vision," on Wednesday, May 15, 2024, at Northeastern University's Curry Student Center and online.

Abstract

The goal of Explainable AI is to make AI systems and their decisions transparent and comprehensible to humans. This involves applying a range of methodologies and techniques that provide insight into how AI models work: for example, how they represent information and how they connect inputs to their corresponding outputs. In this talk, Agata will focus specifically on Computer Vision. She will briefly review the history of the field and present several approaches she has explored, such as Unit Interpretability and Class Activation Maps. Agata will show examples of Explainable AI applied to object recognition, action recognition, place categorization, and face classification. Finally, she will discuss how Explainable AI can reveal biases in the training data that have been encoded in the model.


Bio

Agata Lapedriza is a Principal Research Scientist at Northeastern University (Institute for Experiential AI) and a Professor at Universitat Oberta de Catalunya. She is also an Affiliate Professor at Bouvé College of Health Sciences at Northeastern University and a Research Affiliate at the Massachusetts Institute of Technology (MIT) Media Lab. Her research focuses on Human-centered AI, with an emphasis on developing fair and reliable systems that can perceive and understand human behavior, expressions, and contextual information. She specializes in use cases related to Social Robotics and AI for Health and Wellness. Her expertise spans Computer Vision, Language Models, Sensor Data, Affective Computing, and Responsible AI. From 2012 to 2015 she was a Visiting Professor at MIT CSAIL, where she worked on Computer Vision and Explainable AI. From 2017 to 2020 she was a Research Affiliate at the MIT Media Lab, where she worked on Emotion Perception, Emotionally-aware Dialog Systems, and Social Robotics. In 2020, she spent one year as Visiting Faculty at Google (Cambridge, USA), and more recently (2022-2023) she worked as a part-time contractor at Apple Machine Learning Research.

