Abstract

AI adoption is growing exponentially in most scientific fields. In this seminar, Arvind Narayanan will argue that this adoption has been hasty and comes with systemic risks, including the proliferation of errors such as leakage. It may also shift the priorities of scientists from explaining the world to creating predictive models that provide only an illusion of understanding and often underpin ethically dubious applications.

To realize the benefits of AI in science and to maintain credibility in the scientific enterprise, a course correction is needed. Scientific fields should adopt AI in a slower and more deliberate fashion so that they can make the necessary changes to scientific practice and epistemology. He will present a few specific ideas to improve the rigor of research. Finally, the ambition of “automating science” is too grandiose, but specific tools for automating repetitive tasks can help scientists.

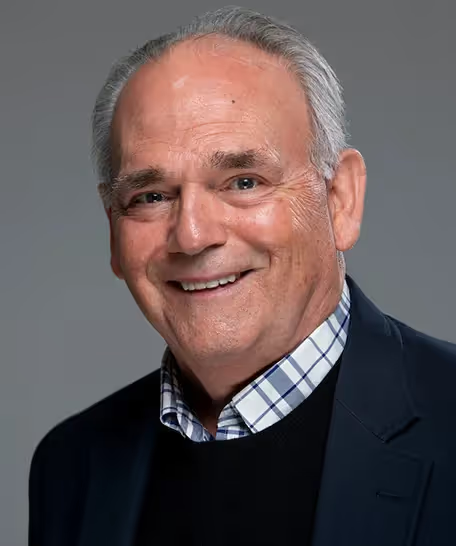

Bio

Arvind Narayanan is a professor of computer science at Princeton University and the director of the Center for Information Technology Policy. He is a co-author of the book AI Snake Oil and of a newsletter of the same name, which is read by 50,000 researchers, policy makers, journalists, and AI enthusiasts. He previously co-authored two widely used computer science textbooks: Bitcoin and Cryptocurrency Technologies and Fairness in Machine Learning. Narayanan led the Princeton Web Transparency and Accountability Project to uncover how companies collect and use our personal information. His work was among the first to show how machine learning reflects cultural stereotypes. Narayanan was named to TIME's inaugural list of the 100 most influential people in AI. He is a recipient of the Presidential Early Career Award for Scientists and Engineers (PECASE).

