Is 99% good enough? The importance of Adversarial Attacks in AI Models

.avif)

Adversarial attacks are deliberate manipulations of data designed to induce undesired behavior in machine learning models. Evasion attacks, a specific type of adversarial attack, focus on modifying input data in such a way that it bypasses security measures or detection systems, allowing malicious activities to go undetected or unchallenged.

Machine learning models are incredibly effective, often achieving 99% accuracy or higher in real-world applications, whether it's diagnosing diseases, detecting fraud, or predicting market trends. This high level of performance makes them appealing in industries that demand precision. However, this “near-perfect” accuracy can mask critical vulnerabilities, especially when considered in light of safety standards like IEC 61508.

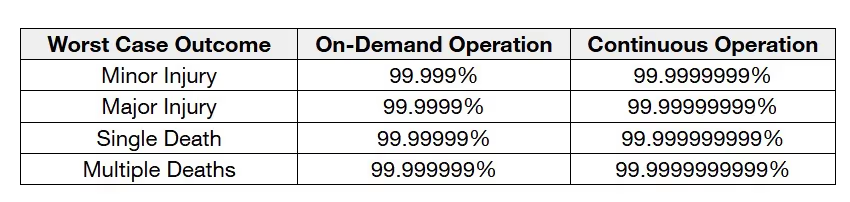

IEC 61508 is an international standard for the functional safety of electrical, electronic, and programmable electronic systems. Table 1 details these regulatory standards, assuming that continuous models operate once per second. IEC 61508 places a strong emphasis on ensuring that safety systems achieve a high level of reliability and risk mitigation. In safety-critical industries such as healthcare, manufacturing, and transportation, even a 1% failure rate can lead to catastrophic consequences. A machine learning model’s 99% accuracy, while impressive, can still mean that 1% of predictions are wrong—and in high-stakes environments, that 1% could be the difference between life and death.

Examples

To further illustrate the risks posed by even hypothetical adversaries, several cross-disciplinary examples are provided below.

- Education – How many words do I need to change before your plagiarism detection tool is useless?

- Finance – Can I write a check for $1000 that the machine classifies as $9999?

- Government – Can I manipulate voting data in a way that bypasses fraud detection models and distorts election outcomes?

- Healthcare – Will your MRI diagnostic imaging model be resilient to single-pixel failures?

- Housing – Can I manipulate property valuation algorithms to inflate home prices or deflate them in my favor?

- Policing – Can I alter surveillance footage or sensor data to mislead predictive policing algorithms and avoid detection?

- Telecom – can I craft a message that bypasses your spam filter and reaches the inbox, even though it's actually malicious?

Adversaries as the Worst Case

Adversarial analysis is critical for understanding the true robustness of your machine learning model, particularly in high-risk or highly-regulated domains. While test set accuracy- often exceeding 99%- shows how well a model performs in laboratory conditions, adversaries assume the worst-case scenario. By building models that are robust to intentional attacks, we can guarantee performance in the presence of more mundane adversaries like typos, noise, and sampling bias. Adversarial analysis not only provides a tool to examine the worst case scenario, but allows us to make informed decisions about model architecture, hardware choice, and cost aware analyses of popular defense strategies.

Not Safe from Hackers—Just Safe

Rather than prepare the model for a cliched hacker in a hoodie, we must prepare our models for sensor failure, noise, dust, rain, or any other scenario that might be just outside of our laboratory conditions. Adversarial analysis can also be used to accelerate the development of better models.

So, one question remains:

Are you sure we can’t break your model?

If you don’t know for a fact that the answer is “no”, then the answer is almost certainly “yes”.

If you’re not sure, contact us.

.avif)

.avif)