The Best of Both Worlds: Walid Saba on a New Way to Model Language

Contrary to popular belief, Large Language Models (LLMs) like ChatGPT are not models of language but of patterns in language. These statistical regularities are stored as weights on connections between millions of small mathematical functions or “neurons.” Lift the hood on any language model and you won’t find any words, syntax trees, or concepts, just numbers.

For Walid Saba, it makes sense that LLMs would come with so many limitations, why they struggle with things that humans take for granted — things like planning, analogical reasoning, common sense, plausible inference, co-prediction, and intension.

Saba is a senior research scientist at the Institute for Experiential AI. As part of the Expeditions in Experiential AI Series, he made the case for what he calls, “bottom-up reverse-engineering of language at scale” but in a symbolic setting. What does that mean? Well, to answer that, you have to know a bit of AI history.

GOFAI

The traditional approach to AI, sometimes called “good old-fashioned AI” (GOFAI), made use of symbolic or semantic systems to structure meaning based on concepts, semantic networks, and linguistic rules. It was a top-down approach that leveraged logic, theory, and linguistics to build language models that could grasp the meaning behind words and their pattern of use. Because top-down approaches require a generally agreed upon set of principles to start from, these theory-driven approaches did not scale.

However, bottom-up approaches that do not assume any theory, merely trying to determine from text data how language works, could go much further than anyone ever imagined. The idea was based on word embeddings — vectors that approximated the meaning of words by the contextual patterns where a word usually occurs.

“The brilliance of this idea is that meaning can be used as a mathematical object that computers understand,” Saba said. “You can now compute with meanings. Before that, they were just characters or symbols. Now I have a mathematical object that I can compute with.”

While symbolic methods suffered from what’s called the “symbol grounding problem” — namely, how symbols get their meaning and where the meaning come from — the empirical, bottom-up approach represented by artificial neural networks (ANNs) solved the problem by approximating the meaning of a word based on how it's used in language. It was such a wonder for predicting patterns of language that the GOFAI approach was all but abandoned. In its place was a new, bottom-up, statistical methodology that aimed to “see what the data tells us.”

While the new approach was enormously successful at predicting patterns in language — and still is — its cracks were shown early on its failure to exhibit the kind of “understanding” that you would expect to find in an artificially intelligent system.

Cracks in the Foundation

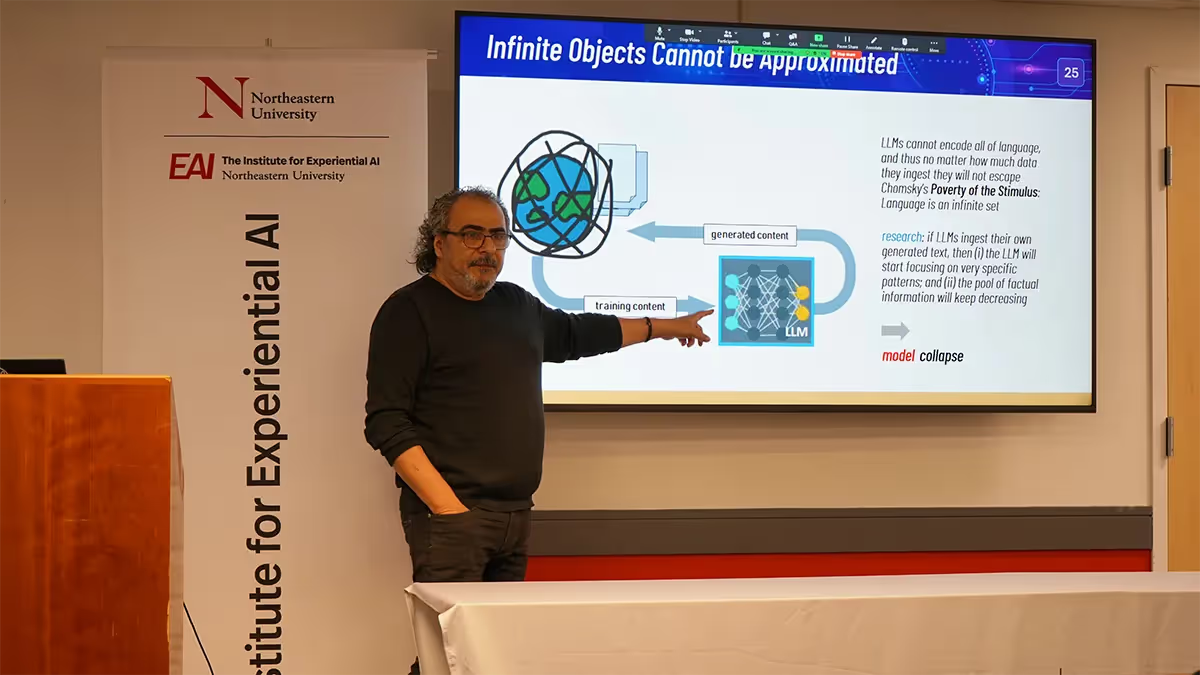

With LLMs, there is no ground truth or facts on which they operate, which is pretty much antithetical to the way human beings learn and develop language. They require lots and lots of language data — most of it pulled from the internet — so the language they produce will always be at the mercy of training data, rife as it is with toxicity, bias, error, etc. To complicate things, words in a large language model are scattered and distributed across the network as millions and millions of weights. That makes computations unexplainable by design. As Saba put it, “In a neural network, the original components of the computation are gone, lost, so explainability is hopeless.”

And the sheer amount of data required to train a model remains enormous. GPT-4, for example, had to ingest a corpus of several trillion words or tens of millions of books. If a child had to process the same amount of data to learn to speak it wouldn’t do so until it was 160,000 years old. Clearly, human language is doing something right, and if Saba is correct, the problem with LLMs cannot be fixed by simply adding more data; the problem is paradigmatic.

Not so the GOFAI approach. Because symbolic, rationalist approaches to AI are built on systems of understanding, they can always refer back to a given premise or factual basis for potential correction — much the same way people learn. However, as Saba pointed out, the top-down approach has its own drawbacks in that it is always tied to theories about how language works, and those can always be refined or thrown out altogether. Here, the empirical, bottom-up style of modern LLMs could be retained to build an approach that offers the best of both worlds.

“The reason we got here is not because of neural networks,” Saba said. “It's because of the bottom-up reverse engineering strategy that says, ‘let's see what the data tells us.’ If we do that in a symbolic, semantic, and cognitively plausible way then we get the best of both worlds. We discover what language is all about but symbolically and not statistically and without any reference to knowledge and common sense.”

LLMs in their current state are wonders of language prediction, but anyone who has used ChatGPT will quickly discover its shortcomings. When deployed in the real-world, those shortcomings can cause real harm. Maybe it’s time for a new approach, one that models itself after the most efficient language learning mechanism we know of: the human brain.

After his talk, Walid stuck around to answer questions from the audience. Read his responses here. You can watch Walid’s full talk here.

Learn more about Saba’s research here or read about his AI philosophy here. The Institute for Experiential AI engages in all kinds of AI research but always with a human-centric approach. Learn about our core focus areas here.