A Make-or-Break Year for Responsible AI

In a breakout year for AI, many companies that failed to adopt a Responsible AI (RAI) framework suffered serious consequences, whether in the form of hasty top-level decisions, lost revenue streams, or bad PR. For organizations seeking a less reactive, more comprehensive approach to AI, the Responsible AI Practice at the Institute for Experiential AI offers a slate of strategic RAI offerings: Maturity Assessment and Action Plan; Governance; Assessment; Advisory; and Training.

Tailored to meet organizations where they are, our RAI Practice provides a key advantage over the generic dashboards and plans offered by many consulting firms. The RAI Practice draws on the unique position of our AI ethics and AI science experts, who combine academic expertise with rich industry experience. Our RAI Practice partners with organizations across industries to audit AI systems, implement Responsible AI governance strategies, develop and deploy safe and robust AI products, and mitigate AI risks while enhancing value and meeting regulatory obligations.

“The RAI Maturity Assessment and Action Plan is the first thing we recommend to partners who come to us and don’t know where to start with Responsible AI. It offers a quick way to know where your organization stands today and highlights what actions are needed to meet the shifting standards of the moment.”

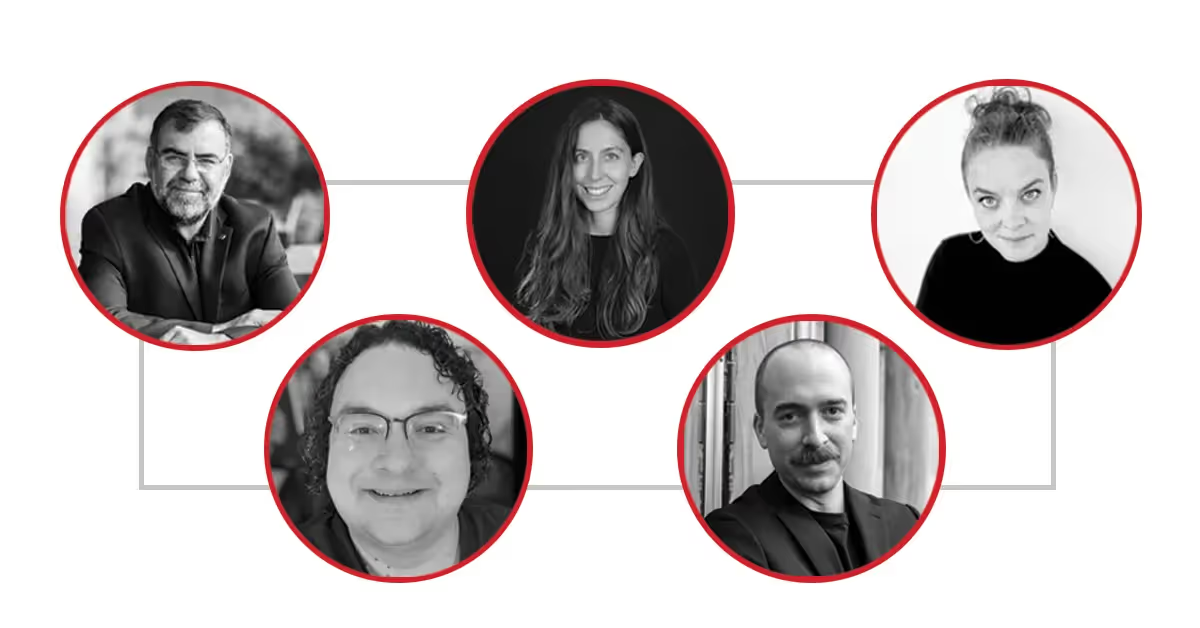

-Matthew Sample, AI Ethicist

“RAI Governance is the scaffolding that holds everything together for Responsible AI projects to take place efficiently, effectively, and in a sustainable manner. We structure the organization’s RAI strategy based on their priorities and values and build what I call the playbook: the guidelines, tools, and everything else you need to establish a process where every AI system goes through an RAI workflow, with clear roles and responsibilities across the organization.”

-Cansu Canca, Director of Responsible AI Practice

“The RAI Assessment helps you get ahead of the game. It’s about understanding how your machine learning systems are behaving and what kind of impact they’re having on your users. We also look at the processes you already have in place so you can catch problems before they grow and ensure you’re where you need to be when the next law comes down the pipeline. That customization is a key part of our offering.”

-Tomo Lazovich, Senior Research Scientist

“We created the AI Ethics Advisory Board because internal AI ethics boards represent a conflict of interest and there aren’t enough experts who understand these issues. Organizations need to know if specific AI applications are legitimate and what their level of risk is. That’s not something they require all the time (yet). That’s why we provide an expert advisory board on demand.”

-Ricardo Baeza-Yates, Director of Research

“With RAI Training, we want to create varying degrees of responsibility and skill sets within the organization. For instance, we have company-wide training to increase cultural awareness; targeted training sessions to create Responsible AI responders, who take on responsibilities within the organization and can flag issues and find relevant tools and guidelines; and executive education training for senior management to make better strategy and governance decisions. We don’t want Responsible AI to happen only at the board level, because that would create a bottleneck.”

-Cansu Canca, Director of Responsible AI Practice

“Today, organizations are expected to assess the broader impact of the systems they develop and employ, as well as mitigate any unethical consequences of those systems. This demand comes not only from consumers, but is increasingly manifested in regulation that requires this to be done in a systematic, reliable, and traceable manner. The challenge is that most businesses are not prepared for this. The RAI Maturity Assessment and Action Plan is designed to solve these problems by providing a thorough overview of what is needed to move forward and a clear path to achieve it, while bringing all the relevant expertise to the table.”

-Laura Haaber Ihle, AI Ethicist

Explore more ways our Responsible AI Practice can help your organization navigate ethical challenges presented by AI technologies.
