Is Your Business [Actually] Ensuring Responsible AI Practices?

![Is Your Business [Actually] Ensuring Responsible AI Practices?](https://cdn.prod.website-files.com/657141b092d019b05ff5788e/6747666fe97f77a9691cc765_November%20%2724%20newsletter%20top%20graphic.avif)

At this stage of AI adoption, the technology is transforming operations and driving innovation – and creating critical ethics challenges for organizations. From racial bias in health algorithms to hiring platforms favoring male applicants, the risks are real. It might seem like the solution is simple: debias the algorithms, and the issue is resolved.

Not so fast, says Cansu Canca, director of Responsible AI Practice at the Institute for Experiential AI.

“Bias is significant and complex. Addressing bias and fairness isn’t a one-size-fits-all solution,” Canca explains. “It’s not just about correcting algorithms or using representative datasets. What fairness means in healthcare, finance, insurance, or hiring processes can vary greatly. For example, fairness in insurance might look entirely different from fairness in acute care or decision-making during a pandemic. Tackling these challenges requires a comprehensive approach, from establishing strong organizational governance and evaluating AI projects/products for bias (and many other equally critical ethical concerns such as privacy, transparency, and agency) to effectively training teams, assigning roles and responsibilities, and beyond.”

The key is a proactive, comprehensive approach to Responsible AI.

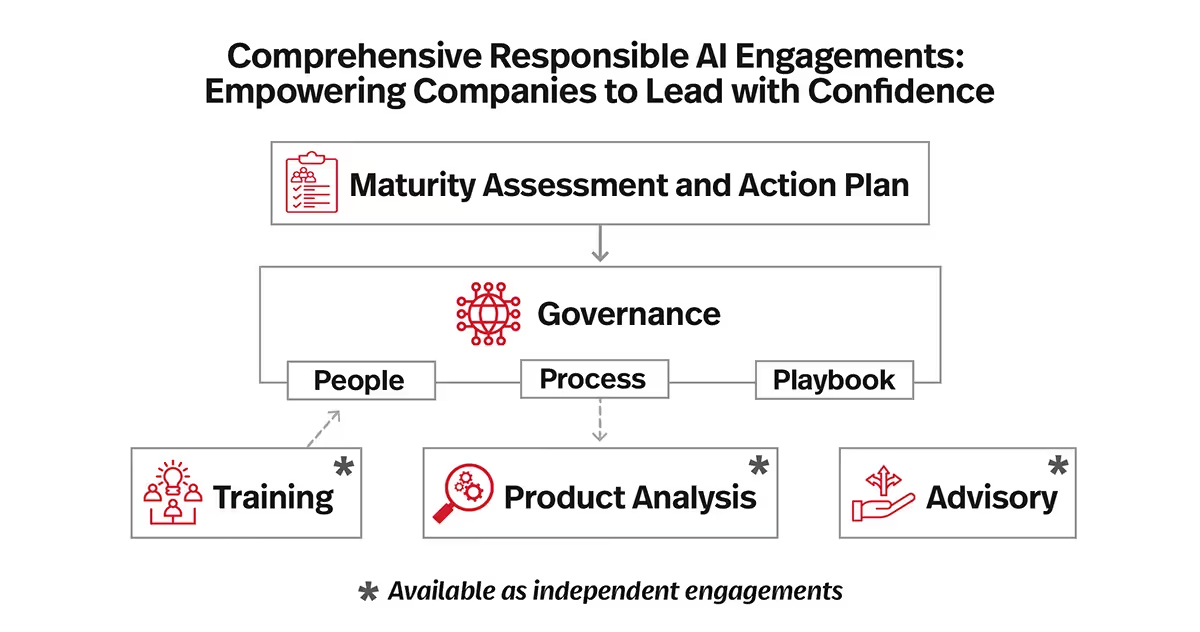

Our Responsible AI Practice supports industry leaders like Verizon in navigating these complexities, providing tailored and proven solutions at every stage of their Responsible AI and data adoption, including:

Maturity Assessment and Action Plan: Often an ideal first step, this evaluation provides a comprehensive analysis of an organization's readiness and practices in Responsible AI (RAI). It identifies gaps in governance—covering people, processes, and playbooks—and offers clear recommendations on what to build, buy, or implement to achieve sustainable RAI integration.

Governance (People, Process, Playbook): Before integrating RAI tools or procedures into existing workflows, companies must first establish strong governance foundations. Our experts examine the key elements, including company culture, values, processes, and principles, and deliver customized guidance, scorecards, roadmaps, and more. The opposite of an off-the-shelf solution!

Product and Projects Analysis: Through rigorous evaluations of AI products and projects, our experts help partners understand the impact, mitigate risk, ensure compliance, and avoid causing harm and reputational damage.

Training: Tailored courses that equip organizations, executives, managers, and employees with the skills and knowledge to effectively integrate RAI practices into their organizations, fostering a culture of scalable, responsible innovation and growth.

Advisory: From an on-demand, independent AI Ethics Advisory Board to bespoke one-on-one consultations, our RAI practice provides unbiased guidance, supporting responsible AI development and deployment at every stage.

While each of these offerings complements ongoing AI projects, they all stress the importance of integrating ethics effectively and efficiently into the very process of innovation. Collaborations must begin with the understanding that Responsible AI is not an afterthought, but a foundational element of successful AI.
