What Is Common Sense? An AI Perspective from Ron Brachman

What is common sense? Ralph Waldo Emerson called it “genius dressed in working clothes.” Somerset Maugham said it’s just another name for the “thoughtlessness of the unthinking.” Computer scientist Yejin Choi likened it to the “dark matter of intelligence,” referring to the unspoken knowledge we all seem to share.

Whatever common sense is, it’s increasingly clear AI doesn’t have it. In many ways, AI is, to quote Yejin Choi, “shockingly stupid.” Perhaps even worse, its failures are often impossible to explain. Sophisticated image recognition tools mistake school buses for ostriches. Self-driving cars stop if they see a photograph of a stop sign on a billboard. Alexa once challenged a 10-year-old to plug a phone charger halfway into an electrical outlet and touch a penny to the prongs.

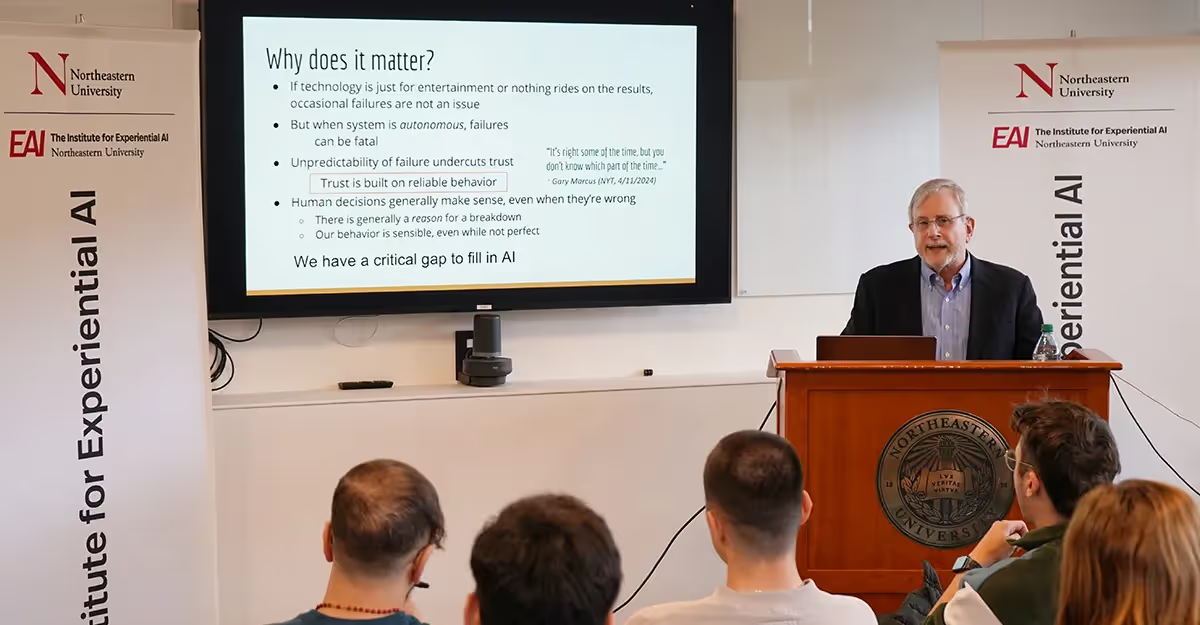

Trustworthy AI is simply not in the cards as long as systems behave this way. For Ron Brachman, that means going back to the drawing board to understand what exactly common sense is and how we might begin to recreate it in machines.

Brachman is the director of the Jacobs Technion-Cornell Institute at Cornell Tech, and as part of a Distinguished Lecturer Seminar hosted by the Institute for Experiential AI, he made the case that common sense is, ironically, not so commonly understood. By his account, it is all of the following: broadly known, obvious, simple, based on experience, practical, and generally about mundane things. To put it in psychological terms, common sense sits halfway between System 1 (fast, intuitive) and System 2 (slow, deliberate) thinking, the dual-process model popularized by the late Daniel Kahneman in his book “Thinking, Fast and Slow.”

Critically, however, common sense is not simply a collection of facts about the world. As Brachman defined it, “We might arguably say that common sense is the ability to make effective use of ordinary, everyday experiential knowledge and achieve ordinary practical goals.”

When it comes to artificial intelligence, any attempt to build common sense will have to happen at the foundational level. AI systems need to have good reasons for what they do, which means imbuing them with a sense of intent. To mature and evolve, such a system must also be amenable to correction when humans disagree with its conclusions. Its architecture should be able to isolate a mistaken belief or objective and change it accordingly. Moreover, those beliefs should govern the behavior of the entire system.

“If we see that the system has a belief that we think is false, we should be able to explain an alternative belief,” Brachman explained. “And the system ought to be able to adopt the new belief and change its action based on it.”
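As a toy illustration (not drawn from Brachman’s talk), the property he describes can be sketched in a few lines of Python. The `Agent` and `Belief` classes, the `believe`, `revise`, and `decide` methods, and the belief key `billboard_signs_apply` are all hypothetical names invented for this sketch. The agent’s single decision is derived from an explicit belief, so every action comes with a stated reason, and a person can replace one false belief and watch the behavior change. It reuses the stop-sign-on-a-billboard failure from above; a real commonsense system would need far more than a dictionary of propositions.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Belief:
    """One proposition the agent holds, with a human-readable justification."""
    proposition: str
    holds: bool
    reason: str


@dataclass
class Agent:
    """Hypothetical toy agent whose actions are derived from its belief base."""
    beliefs: dict = field(default_factory=dict)

    def believe(self, key: str, proposition: str, holds: bool, reason: str) -> None:
        # Record a belief under a key so it can later be isolated and revised.
        self.beliefs[key] = Belief(proposition, holds, reason)

    def revise(self, key: str, holds: bool, reason: str) -> Belief:
        # Replace exactly one belief; raises KeyError if it was never held.
        old = self.beliefs[key]
        self.beliefs[key] = Belief(old.proposition, holds, reason)
        return old

    def decide(self, sees_stop_sign: bool, on_billboard: bool) -> tuple:
        # Return (action, explanation); the explanation cites the belief used.
        if not sees_stop_sign:
            return "proceed", "no stop sign in view"
        if not on_billboard:
            return "stop", "a roadside stop sign applies to this car"
        belief = self.beliefs["billboard_signs_apply"]
        action = "stop" if belief.holds else "proceed"
        return action, f"{belief.proposition}: {belief.holds} ({belief.reason})"


car = Agent()
car.believe(
    "billboard_signs_apply",
    "a stop sign pictured on a billboard is a traffic control",
    True,
    "generalized from images of stop signs",
)
print(car.decide(sees_stop_sign=True, on_billboard=True))  # stops, citing the belief

# A person spots the false belief and supplies an alternative.
car.revise(
    "billboard_signs_apply",
    False,
    "signs on billboards are advertisements, not traffic controls",
)
print(car.decide(sees_stop_sign=True, on_billboard=True))  # now proceeds, citing the new reason
```

Because `decide` reads the belief instead of hard-coding the answer, correcting that one belief is enough to change the action, and the returned explanation exposes which belief was responsible.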

Easier said than done, of course, but this focus on common sense was central to what is sometimes dismissively called “good old-fashioned AI” (GOFAI); AI pioneer John McCarthy highlighted its significance as far back as the 1950s. This approach also seems to favor the symbolic methods of yesteryear. (Why AI research drifted away from common sense might be explained by the success of bottom-up statistical modeling, most visibly in the development of neural networks.)

In any case, most would agree the lack of common sense in AI is a major problem. Changing course, Brachman argued, will first require a clear definition of what common sense is: namely, that it is not merely an accumulation of facts but a system of beliefs and goals derived in part from experience.

“Life is not a gigantic, commonsensical Jeopardy game, where you just give an answer or question to an answer, so to speak, and then you move on to something completely different,” he explained. “It's about the appropriate and timely use of all those things in the right setting. To say that something has common sense, I think we need to go far beyond the statement that we know an awful lot of common sense facts and rules.”

Watch Ron Brachman’s full talk, and stay tuned for his answers to audience questions.